astrossoundhell

1 month ago

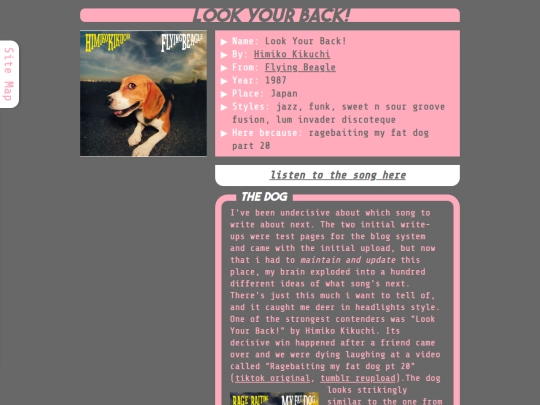

New audio log entry! For some reason git detects new Filly Fill renders of old, unchanged fillouts as new files, so the main image is a screenshot of the "Machine" by BUCK-TICK post. That's not the new one. The new one is Look Your Back! by Himiko Kikuchi: https://auberylis.moe/audio/posts/post3

astrossoundhell

1 month ago

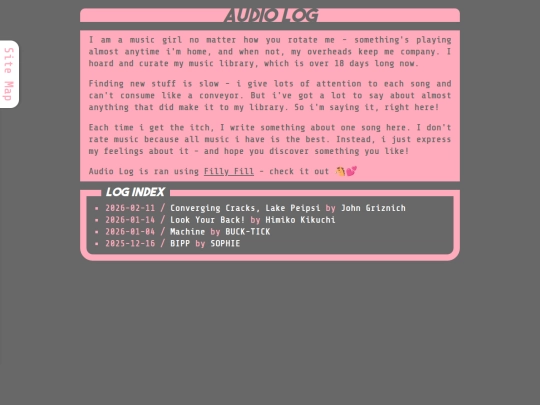

Ok, bigger-than-usual website update - i now have an audio log! I've wanted to ramble about music i like for a long time, and it comes with the bonus of someone potentially discovering something cool. For now there are just two songs on there, but i plan to try and do one song a week.

astrossoundhell

1 month ago

Also, the whole thing runs on Filly Fill, a templater i made. Let's see how it goes - so far the tool is definitely Not stable, but it does what it needs to? Check it out here: https://gitea.com/AuberyLis/FillyFill

lydels

1 month ago

omg i absolutely love this section and the way you write about music!! will definitely be checking out your recommendations

astrossoundhell

1 month ago

@lydels aww thank you so much! you're making me blush. i was a bit on the fence about whether my music thoughts *really* need to be out there, and whether they'd be fun or useful for anyone, but this settles it ^w^

astrossoundhell

2 months ago

it can do nested lists and complicated structures now... this seems ideal for the split-topic subblog system that i want to set up

One of my recent Tumblr posts reads kind of like that sort of poetry where nothing rhymes and the rhythm is on vacation? So i formatted it to my liking and put it here. I like it. It's half tongue-in-cheek "haha look, Poetry", half dead serious "i accidentally almost made myself cry by typing out what's in my head". I had some more ideas like this that i might type out someday.

(for now it's unlisted in the sitemap and not linked anywhere on my site, so it's a People Checking My Profile exclusive. I'll list it once there are like... at least 3-4 thingies to look at)